- Topic1/3

15k Popularity

35k Popularity

18k Popularity

6k Popularity

172k Popularity

- Pin

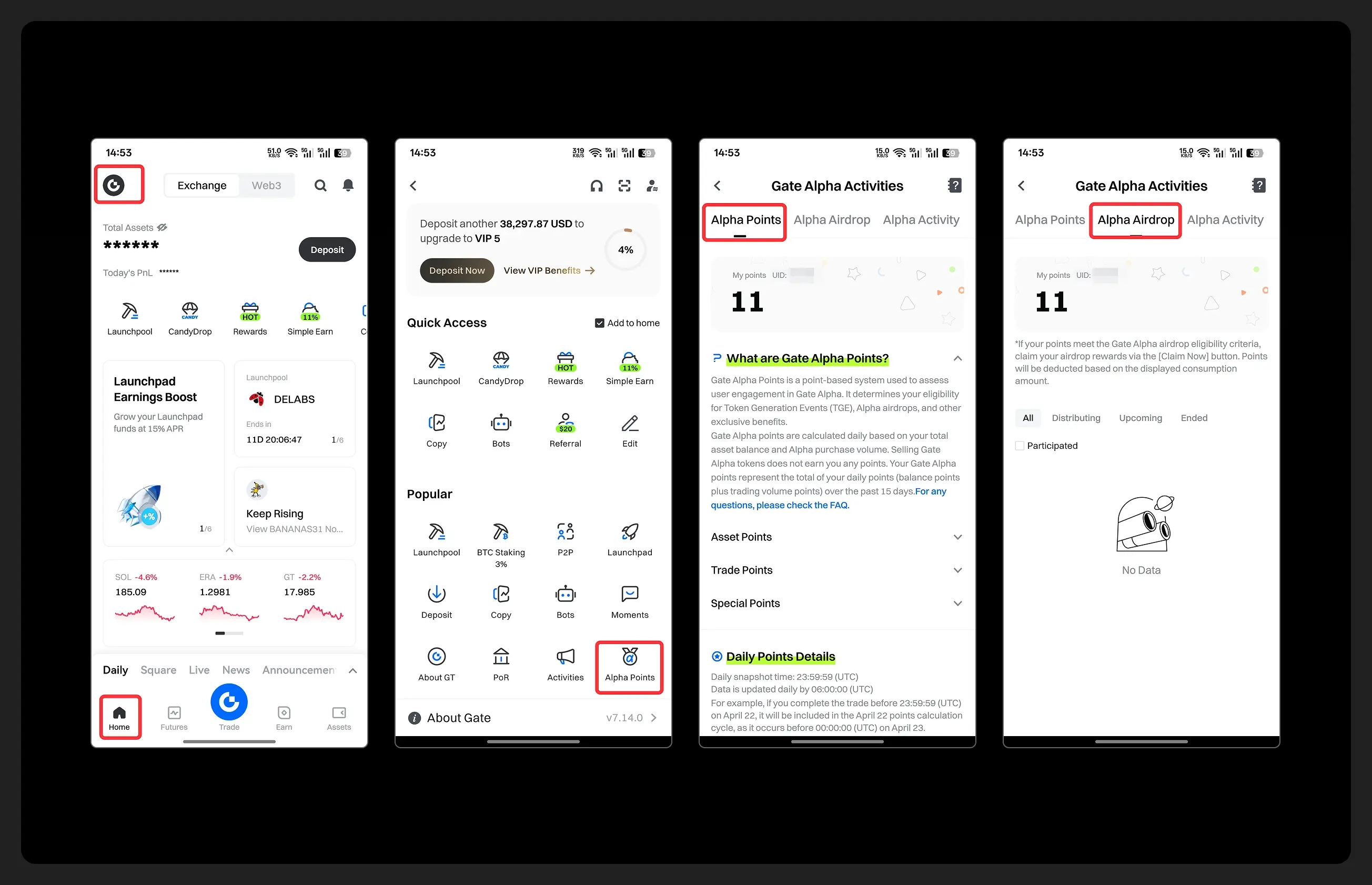

- Hey fam—did you join yesterday’s [Show Your Alpha Points] event? Still not sure how to post your screenshot? No worries, here’s a super easy guide to help you win your share of the $200 mystery box prize!

📸 posting guide:

1️⃣ Open app and tap your [Avatar] on the homepage

2️⃣ Go to [Alpha Points] in the sidebar

3️⃣ You’ll see your latest points and airdrop status on this page!

👇 Step-by-step images attached—save it for later so you can post anytime!

🎁 Post your screenshot now with #ShowMyAlphaPoints# for a chance to win a share of $200 in prizes!

⚡ Airdrop reminder: Gate Alpha ES airdrop is

- Gate Futures Trading Incentive Program is Live! Zero Barries to Share 50,000 ERA

Start trading and earn rewards — the more you trade, the more you earn!

New users enjoy a 20% bonus!

Join now:https://www.gate.com/campaigns/1692?pid=X&ch=NGhnNGTf

Event details: https://www.gate.com/announcements/article/46429

- Hey Square fam! How many Alpha points have you racked up lately?

Did you get your airdrop? We’ve also got extra perks for you on Gate Square!

🎁 Show off your Alpha points gains, and you’ll get a shot at a $200U Mystery Box reward!

🥇 1 user with the highest points screenshot → $100U Mystery Box

✨ Top 5 sharers with quality posts → $20U Mystery Box each

📍【How to Join】

1️⃣ Make a post with the hashtag #ShowMyAlphaPoints#

2️⃣ Share a screenshot of your Alpha points, plus a one-liner: “I earned ____ with Gate Alpha. So worth it!”

👉 Bonus: Share your tips for earning points, redemption experienc

- 🎉 The #CandyDrop Futures Challenge is live — join now to share a 6 BTC prize pool!

📢 Post your futures trading experience on Gate Square with the event hashtag — $25 × 20 rewards are waiting!

🎁 $500 in futures trial vouchers up for grabs — 20 standout posts will win!

📅 Event Period: August 1, 2025, 15:00 – August 15, 2025, 19:00 (UTC+8)

👉 Event Link: https://www.gate.com/candy-drop/detail/BTC-98

Dare to trade. Dare to win.

Aptos introduces the Shoal framework, which significantly reduces Bullshark latency and eliminates the need for timeouts.

Reducing Bullshark Latency on Aptos: An Overview of the Shoal Framework

Aptos Labs has addressed two important open problems in DAG BFT, significantly reducing latency and, for the first time, eliminating the need for timeouts in deterministic practical protocols. Overall, Shoal improves Bullshark's latency by 40% in fault-free cases and by 80% in cases with faults.

Shoal is a framework that enhances any Narwhal-based consensus protocol (such as DAG-Rider, Tusk, or Bullshark) with pipelining and a leader reputation mechanism. Pipelining introduces an anchor in every round to reduce DAG ordering latency, while leader reputation ensures that anchors are associated with the fastest validators, further improving latency. In addition, leader reputation allows Shoal to leverage asynchronous DAG construction to eliminate timeouts in all scenarios, achieving a property of universal responsiveness.

Shoal's core technique is simple: it runs multiple instances of the underlying protocol one after another. When instantiated with Bullshark, it is like a group of "sharks" running a relay race.

Background

In the pursuit of high-performance blockchain networks, there has been a continuous focus on reducing communication complexity; however, this has not led to a significant increase in throughput. For example, the HotStuff implementation in early Diem achieved only 3,500 TPS, far below the target of 100k+ TPS.

The recent breakthrough stems from the realization that data propagation is the main bottleneck of leader-based protocols and that it can benefit from parallelization. The Narwhal system separates data propagation from the core consensus logic, allowing all validators to propagate data simultaneously while the consensus component orders only a small amount of metadata. The Narwhal paper reported a throughput of 160,000 TPS.

Aptos previously introduced Quorum Store, its Narwhal implementation, which separates data propagation from consensus and is used to scale the current consensus protocol, Jolteon. Jolteon combines Tendermint's linear fast path with a PBFT-style view change, reducing HotStuff latency by 33%. However, leader-based consensus protocols cannot fully exploit Narwhal's throughput potential.

Therefore, Aptos decided to deploy Bullshark, a consensus protocol with zero communication overhead, on top of the Narwhal DAG. Unfortunately, the DAG structure that enables Bullshark's high throughput comes with a 50% latency cost.

This article explains how Shoal significantly reduces Bullshark latency.

DAG-BFT Background

Each vertex in the Narwhal DAG is associated with a round. To enter round r, a validator must obtain n-f vertices from round r-1. Each validator can broadcast one vertex per round, and each vertex must reference at least n-f vertices from the previous round. Due to network asynchrony, different validators may observe different local views of the DAG.
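The round rule above can be sketched in Python. This is a minimal illustration, not Aptos code: the `Vertex` class, the helper names, and the choice n = 3f + 1 (the usual BFT bound, which the text does not state) are assumptions made for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Vertex:
    round: int
    author: int                       # id of the validator that broadcast it
    parents: frozenset = frozenset()  # (round, author) ids of referenced vertices

def quorum(n: int) -> int:
    """n - f, assuming n = 3f + 1 validators."""
    f = (n - 1) // 3
    return n - f

def can_enter_round(r: int, local_dag: dict, n: int) -> bool:
    """A validator may enter round r once it holds n - f vertices from round r - 1."""
    if r <= 1:
        return True  # the first round has no predecessor
    prev = [v for v in local_dag.values() if v.round == r - 1]
    return len(prev) >= quorum(n)

def is_valid_vertex(v: Vertex, n: int) -> bool:
    """A vertex must reference at least n - f vertices, all from the previous round."""
    if v.round <= 1:
        return not v.parents
    return len(v.parents) >= quorum(n) and all(rnd == v.round - 1 for rnd, _ in v.parents)

# local_dag is keyed by (round, author), so it holds at most one vertex per validator per round.
dag = {(1, a): Vertex(1, a) for a in range(3)}  # this validator has seen 3 of 4 round-1 vertices
print(can_enter_round(2, dag, n=4))  # True: 3 >= n - f = 3
```

Because two validators may hold different subsets of round-1 vertices, each can enter round 2 with a different local view, which is the asynchrony the paragraph describes.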

A key property of the DAG is that it is unambiguous: if two validators have the same vertex v in their local views of the DAG, then they have exactly the same causal history for v.
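A minimal sketch of causal history, assuming vertices are identified by (round, author) and each local view stores only parent references. As an illustrative assumption, a validator adds a vertex to its view only once all of that vertex's ancestors are present, and an id uniquely identifies a vertex's contents; under those assumptions two different views that both contain v compute the same history for v.

```python
def causal_history(vid, view):
    """Return every vertex id reachable from vid through parent references, vid included."""
    seen, stack = set(), [vid]
    while stack:
        cur = stack.pop()
        if cur not in seen:
            seen.add(cur)
            stack.extend(view[cur])  # view maps id -> tuple of parent ids
    return seen

# Two validators with different local views that both contain v = (2, 0).
view_a = {
    (1, 0): (), (1, 1): (), (1, 2): (), (1, 3): (),
    (2, 0): ((1, 0), (1, 1), (1, 2)),
    (2, 1): ((1, 1), (1, 2), (1, 3)),  # seen only by validator A
}
view_b = {vid: view_a[vid] for vid in [(1, 0), (1, 1), (1, 2), (2, 0)]}

print(causal_history((2, 0), view_a) == causal_history((2, 0), view_b))  # True
```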

Total Ordering

Consensus can be reached on the total order of all vertices in the DAG without any additional communication overhead. Validators in DAG-Rider, Tusk, and Bullshark interpret the DAG structure as a consensus protocol, with vertices representing proposals and edges representing votes.

Although they differ in how they apply quorum-intersection logic to the DAG structure, all Narwhal-based consensus protocols share the following structure:

Anchors: Every few rounds there is a predetermined leader, whose vertex is called the anchor.

Ordering Anchors: Validators independently but deterministically decide which anchors to order and which to skip.

Ordering Causal Histories: Validators process their ordered list of anchors one by one and, for each anchor, order all previously unordered vertices in its causal history.
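The three-step structure can be sketched as follows. The protocol-specific rule for deciding which anchors to order (the second step) is abstracted as a hypothetical `decide` callback; in real protocols it is derived from the votes encoded in the DAG. Sorting newly delivered vertices by (round, author) is an assumed deterministic tie-break, chosen only for illustration.

```python
def causal_history(vid, dag):
    """Every vertex id reachable from vid via parent references, vid included."""
    seen, stack = set(), [vid]
    while stack:
        cur = stack.pop()
        if cur not in seen:
            seen.add(cur)
            stack.extend(dag[cur])
    return seen

def order_dag(dag, anchors, decide):
    """
    dag:     vertex id (round, author) -> tuple of parent ids
    anchors: step 1, the predetermined leaders' vertices, in round order
    decide:  step 2, hypothetical rule returning True if an anchor is ordered
    Returns step 3, the total order of delivered vertex ids.
    """
    delivered, output = set(), []
    for anchor in anchors:
        if anchor not in dag or not decide(anchor):
            continue  # skipped anchor
        new = causal_history(anchor, dag) - delivered
        output.extend(sorted(new))  # deterministic tie-break by (round, author)
        delivered |= new
    return output

dag = {
    (1, 0): (), (1, 1): (), (1, 2): (),
    (2, 0): ((1, 0), (1, 1)),
    (2, 1): ((1, 1), (1, 2)),
}
print(order_dag(dag, [(1, 0), (2, 1)], decide=lambda a: True))
# [(1, 0), (1, 1), (1, 2), (2, 1)]
```

Vertex (2, 0) stays unordered here: it will only be delivered once some later ordered anchor includes it in its causal history.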

The key to ensuring safety is that, in the second step (ordering anchors), the ordered anchor lists created by all honest validators share a common prefix. In Shoal, we observe that:

All validators agree on the first ordered anchor point.

Bullshark Latency

Bullshark's latency depends on the number of rounds between ordered anchors in the DAG. The partially synchronous version of Bullshark has better latency than the asynchronous version, but it is still not optimal.

Problem 1: Average block latency. In Bullshark, there is an anchor in every even round, and vertices in odd rounds are interpreted as votes. In the common case, it takes two DAG rounds to order an anchor; however, vertices in an anchor's causal history need more rounds, because they must wait for that anchor to be ordered. In the common case, vertices in odd rounds need three rounds, while non-anchor vertices in even rounds need four rounds.
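The common-case figures above can be turned into a small worked calculation. The model is a simplification assumed for illustration: each of n validators adds one vertex per round, anchors sit in even rounds, exactly one even-round vertex per pair of rounds is the anchor, and every anchor is ordered.

```python
from fractions import Fraction

def bullshark_latency(round_no: int, is_anchor: bool) -> int:
    """Common-case ordering latency in DAG rounds, using the 2 / 3 / 4 figures above."""
    if is_anchor:
        return 2
    return 3 if round_no % 2 == 1 else 4  # odd-round votes vs. even-round non-anchors

def average_latency(n: int) -> Fraction:
    """Average over one (even, odd) pair of rounds with n vertices per round."""
    even = [bullshark_latency(2, True)] + [bullshark_latency(2, False)] * (n - 1)
    odd = [bullshark_latency(3, False)] * n
    samples = even + odd
    return Fraction(sum(samples), len(samples))

print(average_latency(4))    # 13/4
print(average_latency(100))  # 349/100
```

In closed form the average is (2 + 4(n - 1) + 3n) / (2n) = (7n - 2) / (2n), which approaches 3.5 rounds as n grows.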

Problem 2: Latency under faults. If a round's leader fails to broadcast its anchor in time, the anchor cannot be ordered (it is skipped), and all unordered vertices from previous rounds must wait for the next anchor to be ordered. This significantly degrades performance in geo-replicated networks, especially since Bullshark uses timeouts to wait for the leader.
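To see why a skipped anchor hurts, the sketch below uses a toy model assumed for illustration: a vertex from round k is ordered when the first ordered anchor at a later round a commits, which takes a - k + 2 rounds (this reproduces the 2 / 3 / 4-round common-case figures). It assumes every earlier vertex lies in that anchor's causal history, which is a simplification, and it counts only DAG rounds, not the extra wall-clock time a timeout adds.

```python
def ordering_latency(k, ordered_anchor_rounds, own_anchor=False):
    """Rounds until a vertex from round k is ordered, or None if no later anchor is ordered."""
    if own_anchor and k in ordered_anchor_rounds:
        return 2  # the anchor itself: its own round plus one voting round
    later = sorted(a for a in ordered_anchor_rounds if a > k)
    return later[0] - k + 2 if later else None

anchors = {2, 4, 6, 8, 10}  # Bullshark: an anchor in every even round
skipped = anchors - {6}     # the round-6 leader fails to broadcast in time

for k in (4, 5):
    print(k, ordering_latency(k, anchors), ordering_latency(k, skipped))
# 4 4 6
# 5 3 5
```

A single skipped anchor adds two rounds for every vertex that was waiting on it.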

Shoal Framework

Shoal enhances Bullshark (or any other Narwhal-based BFT protocol) through pipelining, allowing an anchor in every round and reducing the latency of all non-anchor vertices in the DAG to three rounds. Shoal also introduces a zero-cost leader reputation mechanism in the DAG that favors selecting fast leaders.

Challenge

In DAG protocols, pipelining and leader reputation are considered difficult problems for the following reasons:

Previous attempts to add pipelining by modifying the core Bullshark logic appeared to be fundamentally impossible.

Leader reputation, introduced in DiemBFT and formalized in Carousel, dynamically selects future leaders (anchors, in Bullshark) based on validators' past performance. While disagreement on leader identity does not violate the safety of those protocols, in Bullshark it may lead to completely different orderings. This raises the core issue: dynamically and deterministically selecting round anchors is necessary for consensus, yet validators need to agree on the ordered history in order to select future anchors.

As evidence of the problem's difficulty, Bullshark implementations, including those currently running in production, do not support these features.

Protocol

Despite the challenges mentioned above, the solution lies in simplicity.

Shoal relies on validators' ability to perform local computation over the DAG, which lets them preserve and reinterpret information from previous rounds. Building on the insight that all validators agree on the first ordered anchor, Shoal sequentially composes multiple Bullshark instances into a pipeline, such that (1) the first ordered anchor is the switching point between instances, and (2) the anchor's causal history is used to compute leader reputation.

Pipelining

There is a predetermined mapping F: R → V that maps rounds to leaders. Shoal runs Bullshark instances one after another, with each instance's anchors predetermined by the mapping F. Each instance orders one anchor, which triggers the switch to the next instance.

Initially, Shoal starts the first Bullshark instance in the first round of the DAG and runs it until the first ordered anchor is determined, say in round r. All validators agree on this anchor, so they can all safely agree to reinterpret the DAG starting from round r+1. Shoal then simply starts a new Bullshark instance in round r+1.

In the best case, this allows Shoal to order one anchor per round. The anchor in the first round is ordered by the first instance. Shoal then starts a new instance in the second round, which has its own anchor and orders it; another new instance then orders the anchor in the third round, and so on.
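The pipelining loop can be sketched as below. It is a simplified model, not Shoal's implementation: `anchor_ordered(r)` is a hypothetical predicate standing in for Bullshark's commit rule under a fixed mapping F, and each instance is assumed to consider anchors every other round starting from the round in which it was launched.

```python
def shoal_pipeline(max_round, anchor_ordered):
    """Return (instance_start_round, ordered_anchor_round) for each Bullshark instance."""
    log, start = [], 1
    while start <= max_round:
        r = start
        # The instance launched at `start` keeps going until it orders its first anchor.
        while r <= max_round and not anchor_ordered(r):
            r += 2  # skipped anchor: try this instance's next anchor round
        if r > max_round:
            break  # no anchor ordered yet within the simulated rounds
        log.append((start, r))
        start = r + 1  # everyone agrees on anchor r, so reinterpret the DAG from r + 1
    return log

print(shoal_pipeline(5, lambda r: True))
# [(1, 1), (2, 2), (3, 3), (4, 4), (5, 5)]
print(shoal_pipeline(5, lambda r: r != 3))
# [(1, 1), (2, 2), (3, 5)]
```

In the second run the round-3 anchor is skipped and the pipeline stalls until round 5, which is exactly the situation leader reputation is meant to make rarer.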

Leader Reputation

Latency increases when Bullshark skips anchors. In that case, pipelining cannot help, because a new instance cannot be started until the previous instance orders an anchor. Shoal uses a reputation mechanism that assigns each validator a score based on its recent activity history, so that validators likely to cause missed anchors are less likely to be chosen as leaders. Validators that respond to and participate in the protocol receive high scores; otherwise they receive low scores, since they may have crashed, be slow, or be acting maliciously.

The idea is to deterministically recompute the predefined mapping F from rounds to leaders every time the scores are updated, biasing it toward high-scoring leaders. For validators to agree on the new mapping, they must agree on the scores, and therefore on the history used to derive them.
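A sketch of the reputation step, using a deliberately simple scoring rule chosen for illustration (the text does not give Shoal's formula): a validator's score is the number of its vertices in the agreed causal history within the last `window` rounds, and the new mapping rotates round-robin over validators scoring at least `min_score`. The function and parameter names are hypothetical. Because both functions depend only on the agreed history, every validator computes the same mapping.

```python
from collections import Counter

def reputation_scores(history, validators, current_round, window):
    """Count each validator's vertices from the last `window` rounds of the agreed history."""
    counts = Counter(author for rnd, author in history if rnd > current_round - window)
    return {v: counts.get(v, 0) for v in validators}

def leader_mapping(scores, min_score):
    """Deterministic F': round -> leader, rotating over validators with score >= min_score."""
    eligible = sorted(v for v, s in scores.items() if s >= min_score)
    if not eligible:
        eligible = sorted(scores)  # fall back to all validators
    return lambda r: eligible[r % len(eligible)]

# Validator 3 stopped contributing vertices after round 1.
history = {(r, a) for r in range(1, 7) for a in (0, 1, 2)} | {(1, 3)}
scores = reputation_scores(history, validators=range(4), current_round=6, window=3)
f_prime = leader_mapping(scores, min_score=2)
print(scores)                              # {0: 3, 1: 3, 2: 3, 3: 0}
print([f_prime(r) for r in range(7, 10)])  # [1, 2, 0]
```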

In Shoal, pipelining and leader reputation combine naturally because they rely on the same core technique: reinterpreting the DAG after reaching agreement on the first ordered anchor.

The only difference is that, after ordering the anchor in round r, validators compute a new mapping F' for rounds r+1 onward, based on the causal history of the ordered anchor in round r. Validators then run a new Bullshark instance starting from round r+1 using the updated anchor selection function F'.

No More Timeouts

Timeouts are critical in all leader-based, deterministic, partially synchronous BFT implementations. However, the complexity they introduce increases the number of internal states that must be managed and observed, which complicates debugging and requires more observability techniques.

Timeouts also significantly increase latency, because they must be configured carefully, often require dynamic adjustment, and depend heavily on the environment (network). Before moving to the next leader, the protocol pays the full timeout penalty for a faulty leader. Therefore, timeouts cannot be set too conservatively, but if they are too short, the protocol may skip good leaders. For instance, we observed that under high load, leaders in Jolteon/HotStuff were overwhelmed, and timeouts expired before they could make progress.

Unfortunately, leader-based protocols such as HotStuff and Jolteon inherently require timeouts to ensure that the protocol makes progress whenever a leader fails. Without timeouts, even a crashed leader could halt the protocol indefinitely. Because it is impossible to distinguish a faulty leader from a slow one during asynchronous periods, timeouts may cause validators to view-change through all leaders without making consensus progress.

In Bullshark, timeouts are used in DAG construction to ensure that, during synchronous periods, honest leaders add anchors to the DAG fast enough for them to be ordered.

We observe that DAG construction provides a "clock" that estimates network speed. As long as n-f honest validators keep adding vertices to the DAG without pausing, rounds continue to advance. Although Bullshark may not be able to order at network speed (due to problematic leaders), the DAG still grows at network speed, even when some leaders are faulty or the network is asynchronous. Eventually, when a non-faulty leader broadcasts its anchor fast enough, the anchor's entire causal history will be ordered.

In our evaluation, we compared Bullshark with and without timeouts in the following cases:

Fast leaders, i.e., at least as fast as the other validators. In this case, both approaches provide the same latency, because anchors are ordered and timeouts never fire.

Faulty leaders. In this case, Bullshark without timeouts provides better latency, because validators immediately skip their anchors, whereas with timeouts validators wait for the timeouts to expire before continuing.

Slow leaders. This is the only case in which Bullshark with timeouts performs better: without timeouts, the anchor may be skipped because the leader cannot broadcast it fast enough, whereas with timeouts, validators wait for the anchor.
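The three cases can be reproduced with a toy timing model. Everything in it is an assumption for illustration, not the evaluation setup: a DAG round takes `delta` time units at network speed, the leader's anchor arrives after `leader_delay` (infinite for a crashed leader), the timeout is at least `delta`, and a skipped anchor costs two extra rounds while the next, fast leader's anchor is ordered.

```python
import math

ANCHOR_GAP = 2  # assumed: the next anchor comes two rounds later, as in Bullshark

def time_to_order(leader_delay, delta, timeout=None):
    """Toy model: time until vertices waiting on this round's anchor are ordered."""
    if timeout is None:
        # No timeout: the round advances at network speed (the DAG "clock").
        if leader_delay <= delta:
            return delta
        return delta + ANCHOR_GAP * delta  # anchor missed the round, skip it immediately
    # With a timeout, validators wait for the anchor for up to `timeout`.
    if leader_delay <= timeout:
        return max(delta, leader_delay)
    return timeout + ANCHOR_GAP * delta  # full timeout penalty, then skip

for name, d in [("fast", 0.5), ("faulty", math.inf), ("slow", 2.0)]:
    print(name, time_to_order(d, 1.0), time_to_order(d, 1.0, timeout=3.0))
# fast 1.0 1.0
# faulty 3.0 5.0
# slow 3.0 2.0
```

Only the slow-leader case favors timeouts, which is the case leader reputation targets by making slow validators less likely to be chosen as leaders.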

In Shoal, avoiding timeouts goes hand in hand with leader reputation. Repeated waiting.